LLM Tools: The New Interface Between Code and Language - Part 1

Remember when GUI programming meant wiring up callbacks in X/Motif or WinForms? Fast-forward to 2025, and large language models are doing something surprisingly similar—with tools that look like your old code, just triggered by natural language. Welcome to the callback renaissance.

Introduction

We are witnessing an explosion in the capabilities of large language models (LLMs) every day. The need to bridge their language understanding with real-world actions has given rise to LLM tools — small, well-defined code snippets or APIs that enable models to perform actions, not just generate text.

These tools mark a fundamental shift in how we design software interactions. Traditionally, users invoked functions by clicking buttons, submitting forms, or calling APIs directly. Now, LLMs can invoke your functions through natural language, autonomously deciding when and how to call them. As a developer, you register your tool by providing metadata and a function signature. The model is then instructed, often through system prompts or function-calling protocols, to select the tool and request its execution when appropriate, using its internal reasoning to supply the arguments.

At its core, an LLM tool is simply a snippet of code you provide, triggered by an external agent when specific conditions are met. If that sounds familiar, it's because this mirrors a long-standing design pattern: callbacks. Whether in GUI frameworks like X/Motif, modern webhooks, or event-driven microservices, the core idea is the same: you write the code, but something else decides when to run it. LLM tools are just the next evolution of this idea, adapted for the world of natural language interfaces and intelligent agents.

The "Aha Moment": Realizing LLM Tools Are Just Callbacks for AI

The breakthrough realization comes when you stop thinking of LLM tools as some exotic, new AI construct—and instead see them for what they really are: callbacks, reimagined for the era of language-based computing.

Back in the days of GUI programming with X/Motif or Windows APIs, developers would register callback functions—snippets of code meant to be triggered by the framework in response to user-driven events like mouse clicks or key presses. You didn't call these functions yourself; the system did, when the conditions were right.

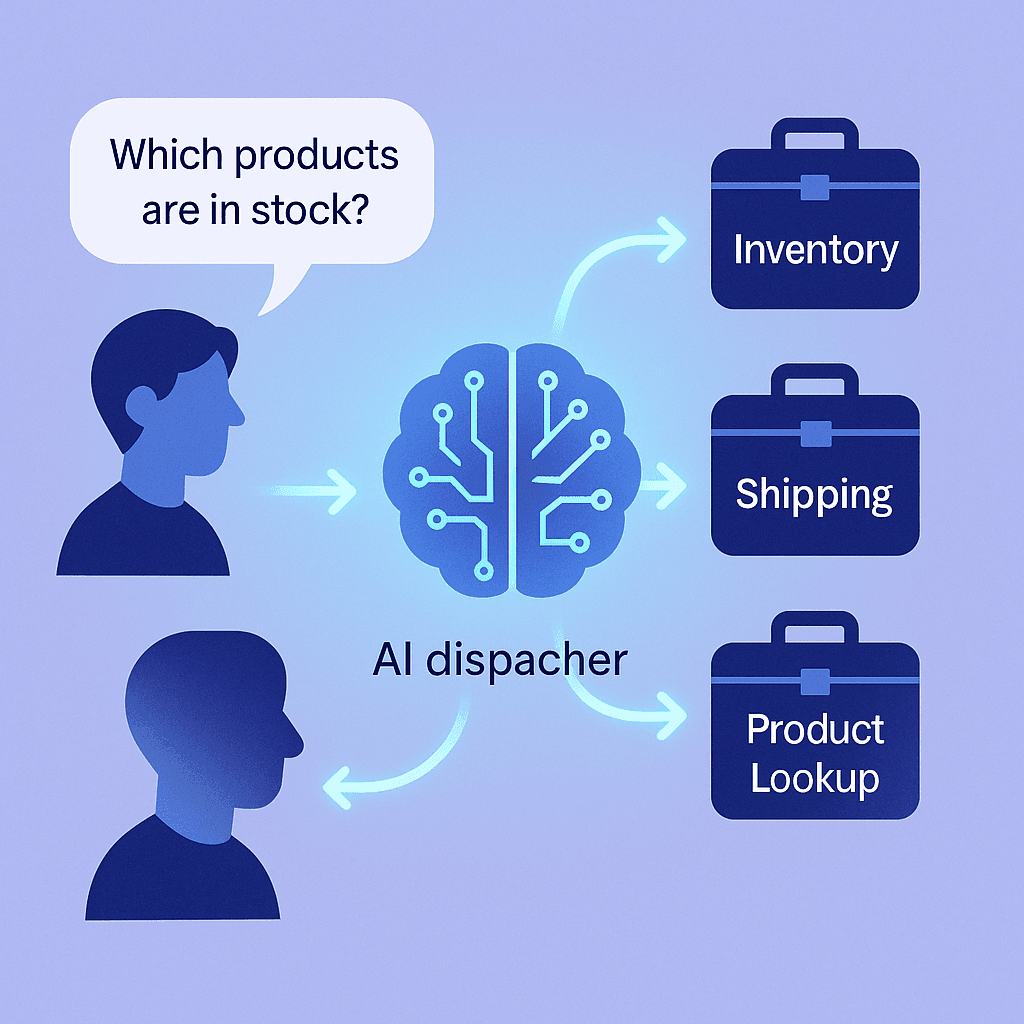
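To make the classic pattern concrete, here is a minimal sketch in plain Python. The `EventLoop` class and the `on_click` handler are hypothetical stand-ins for a GUI toolkit, not real X/Motif or WinForms APIs; the point is only that the application registers a function and the framework decides when to run it.

```python
from typing import Callable, Dict, List


class EventLoop:
    """Toy stand-in for a GUI framework: it stores handlers and decides when to run them."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[str], str]]] = {}

    def register(self, event: str, handler: Callable[[str], str]) -> None:
        # The application hands its function over; it does not call it directly.
        self._handlers.setdefault(event, []).append(handler)

    def fire(self, event: str, payload: str) -> List[str]:
        # The framework, not the application, invokes the registered handlers.
        return [handler(payload) for handler in self._handlers.get(event, [])]


def on_click(widget_name: str) -> str:
    # Hypothetical handler: this is the code the developer writes.
    return f"{widget_name} was clicked"


loop = EventLoop()
loop.register("click", on_click)
results = loop.fire("click", "OK button")
print(results)
```

Swap the click event for a user's question and `fire` for the model's decision, and you have the shape of an LLM tool call.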

Now fast forward to today's AI agents. You define a tool, give it a function signature, describe what it does, and hand it off to the LLM. Then, much like a GUI event loop, the LLM evaluates user input and decides when your function should be invoked—automatically and contextually. The model isn't just a passive language generator anymore; it's an intelligent dispatcher of your code.

That's the "aha!" moment: LLM tools are callbacks, but the event dispatcher is the language model. It waits for just the right natural-language moment to trigger the right piece of logic you've provided. It's callback programming all over again, but this time the events aren't button clicks; they're questions, requests, and intentions expressed in human language.

Why This Analogy Matters for Developers

Understanding LLM tools as callbacks isn't just a clever metaphor—it's a practical mental model that clarifies how these systems work and what they don't do.

When we say LLM tools are "just snippets of code," it might sound like the LLM itself is executing your code internally, like a runtime environment. But that's not what's happening. The model doesn't run your function. It doesn't evaluate your logic. It doesn't access your system or reach into your backend. Instead, it detects when a situation arises—based on the conversation—and requests that your tool be called externally, by your own code or infrastructure. The results are then passed back to the LLM as input for the next stage of the dialogue.

That's precisely how callbacks have always worked. The GUI framework didn't run your business logic; it just notified your program when an event occurred. Your code still lived, and executed, in your own process. LLM tools work the same way: the LLM is the event dispatcher, not the execution environment.

This perspective alleviates a lot of confusion and concern. It repositions LLMs not as black-box magicians executing unknown logic, but as smart dispatchers—language-driven orchestrators—that trigger your well-scoped, well-defined code when the context calls for it. And for developers familiar with callbacks, event handlers, or webhooks, this makes working with LLMs feel a lot more familiar—and a lot less scary.

What You'll Learn in This Post

In this blog post, we'll explain LLM tools by building an eCommerce assistant to showcase the callback pattern in action. You'll notice how:

- Simple tools often begin as basic functions, such as our inventory checker.

- Complex tools can evolve into advanced systems, such as our vector database-powered search.

- The callback pattern stays constant regardless of how complex the tool is.

- LLMs serve as smart dispatchers that recognize when to trigger your code.

Let's dive in and see how your familiar callback programming skills translate perfectly to the world of AI agents.

Code Deep Dive: Tools as Callbacks in Practice

To move from theory to practice, let's look at how this plays out in a real scenario. I'll use an e-commerce assistant as the example, since that's the domain I'm most familiar with.

Imagine a user types in: "I need a pair of noise-canceling headphones under $200." The AI agent, powered by OpenAI's gpt-4o-mini, can easily respond with broad recommendations—it understands the query and can describe options.

But now suppose the user follows up with: "How many units of this model do you have in stock?" That's not something the model itself knows—it has no direct access to your e-commerce database. This is precisely where tools come in.

A tool is nothing more than a function you provide that the model can ask to be called. Just like a callback, you write the code, register it with the framework (in this case, the LLM), and then the external agent decides when to invoke it. The LLM doesn't execute your code internally. Instead, it outputs a structured request saying, in effect:

"I think it's time to call this function with these arguments."

Your application intercepts that request, runs the actual code against your system, and then feeds the result back into the model. The model then continues the conversation with that new knowledge.

This is why the callback analogy is so powerful:

In GUI programming, the event loop decides when to run your handler.

In LLM programming, the model decides when to call your tool.

In both cases, the function still runs inside your code, not inside the framework itself.
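As a rough illustration of that hand-off, the sketch below shows a tool call as plain data and the application-side code that honours it. The dictionary is a simplified, hand-written imitation of a Chat Completions tool call (the arguments arrive as a JSON string); the `check_stock` function, the product key, and the `call_123` id are made up for this example.

```python
import json


def check_stock(product_name: str) -> str:
    # Hypothetical tool; a real one would query your own database.
    stock = {"headphones-x": 12}
    return f"{stock.get(product_name, 0)} units of {product_name} in stock"


# What the model emits: data describing a call, not an executed call.
tool_call_request = {
    "id": "call_123",  # made-up id for illustration
    "type": "function",
    "function": {
        "name": "check_stock",
        "arguments": json.dumps({"product_name": "headphones-x"}),
    },
}

# Your application decides whether and how to honour the request.
available_tools = {"check_stock": check_stock}
function_info = tool_call_request["function"]
handler = available_tools[function_info["name"]]
result = handler(**json.loads(function_info["arguments"]))
print(result)
```

Nothing in the request can run on its own; until your code looks up the handler and calls it, the tool call is just a suggestion.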

With that in mind, let's look at a simple implementation:

    # Initialization
    import os

    from dotenv import load_dotenv
    from openai import OpenAI

    load_dotenv(override=True)

    openai_api_key = os.getenv('OPENAI_API_KEY')
    if openai_api_key:
        print(f"OpenAI API Key exists and begins {openai_api_key[:8]}")
    else:
        print("OpenAI API Key not set")

    MODEL = "gpt-4o-mini"
    openai = OpenAI()

What's happening here:

- load_dotenv(override=True) - Loads environment variables from a .env file, with override=True ensuring that any existing environment variables are replaced by the values from the file.

- API Key Check - Retrieves the OpenAI API key from environment variables and displays a confirmation, showing only the first eight characters for security.

- MODEL = "gpt-4o-mini" - Specifies the model to use. gpt-4o-mini is OpenAI's affordable, fast model, well suited to development and testing. It is a smaller, lower-cost variant of GPT-4o.

- openai = OpenAI() - Creates the main client object that will manage all our API calls. This client automatically uses the API key from the environment variables we just loaded.

This setup provides a clean and secure method for initializing our connection to OpenAI's API. The gpt-4o-mini model is ideal for our tool demonstrations because it's fast, reliable, and supports function calling—exactly what we need for our LLM tools.

Creating a Simple Chat Interface

Let's start by creating a simple chat interface with Gradio. I covered Gradio in an earlier post, Beyond the Prompt: Designing AI Experiences with Gradio; refer to it if you need more background on Gradio and its capabilities.

The beauty of Gradio lies in its simplicity - with just a few lines of code, we can create a fully functional chat interface. Here's how we set up our basic chat system:

    import gradio as gr

    # System prompt
    system_prompt = """
    You are a helpful assistant working for an eCommerce company that helps customers find the best products.
    You must answer accurately, and if you don't know the answer, say so instead of guessing.
    You must be friendly and professional, and keep your responses concise and to the point.
    """

    # Define the chat interface
    def chat(message, history):
        messages = [{"role": "system", "content": system_prompt}] + history + [{"role": "user", "content": message}]
        response = openai.chat.completions.create(model=MODEL, messages=messages)
        return response.choices[0].message.content

    gr.ChatInterface(fn=chat, type="messages").launch()

The Critical Role of System Prompts

One of the most crucial aspects of building practical LLM applications is crafting clear and precise system prompts. Notice in our system prompt above, we explicitly instruct the assistant to:

- Answer accurately and avoid guessing.

- Admit when it doesn't know something instead of making up answers.

- Be friendly and professional while keeping responses concise.

This instruction is particularly important when we introduce tools and callbacks to our LLM. The model needs to understand that it should not fabricate information or make assumptions about data it doesn't have access to. By clearly stating "if you don't know the answer, say so instead of guessing," we ensure that our LLM will properly utilize the tools we provide rather than hallucinating responses.

This foundation becomes even more critical as we progress to more complex scenarios where the LLM needs to decide when to call external tools, APIs, or databases to retrieve information it doesn't inherently possess.
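To see where the system prompt sits in each request, here is a small sketch of the message list that a chat function like the one above assembles on every turn. The `build_messages` helper and the sample history are illustrative assumptions, and the prompt is shortened to the one instruction discussed here.

```python
from typing import Dict, List

Message = Dict[str, str]

# Shortened version of the system prompt, kept to the instruction discussed above.
SYSTEM_PROMPT = (
    "You must answer accurately, and if you don't know the answer, "
    "say so instead of guessing."
)


def build_messages(history: List[Message], user_message: str) -> List[Message]:
    """System prompt first, then prior turns, then the new user message."""
    return (
        [{"role": "system", "content": SYSTEM_PROMPT}]
        + list(history)
        + [{"role": "user", "content": user_message}]
    )


# Hypothetical earlier turns, in the role/content format used by the chat function.
history = [
    {"role": "user", "content": "I need a wide-angle lens."},
    {"role": "assistant", "content": "Here are a few options..."},
]
messages = build_messages(history, "Do you have it in stock?")
for entry in messages:
    print(entry["role"])
```

Because the system prompt is re-sent as the first message of every request, its "say so instead of guessing" instruction applies to each turn, including the turns where a tool result is later added to the list.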

The Limitation of Tool-Free Agents: A Real Example

To demonstrate the limitations of this simple LLM agent, let's examine a typical customer interaction:

Customer Question 1: "I am looking for a wide-angle lens for my Nikon D7500 camera. What options do I have?"

Agent Response: The agent provides a comprehensive, detailed answer with specific lens recommendations, including:

- Nikon AF-S DX NIKKOR 10-24mm f/3.5-4.5G ED

- Tokina AT-X 11-16mm f/2.8 Pro DX II

- Sigma 10-20mm f/3.5 EX DC HSM

- Nikon AF-P DX NIKKOR 10-20mm f/4.5-5.6G VR

- Rokinon 14mm f/2.8 IF ED UMC

The agent excels at this type of general knowledge question, drawing from its training data to provide helpful product recommendations.

Customer Question 2: "Do you have a Nikkor VR camera in stock?"

Agent Response: "I don't have access to real-time inventory data, so I can't check the stock of specific products. However, you can find NIKKOR VR lenses at various retailers or on the official Nikon website..."

The Problem

This interaction perfectly illustrates the fundamental limitation of tool-free LLM agents: they can only work with information from their training data. While the agent can provide excellent general advice about camera lenses, it becomes completely useless when asked about real-time, application-specific data, such as inventory levels. The agent's capabilities are static and bounded by its training cutoff. It cannot:

- Access live databases.

- Check current stock levels.

- Retrieve real-time information.

- Perform any external actions.

This is precisely where tools become essential—they bridge the gap between the LLM's language understanding and the dynamic, real-world data that users actually need.

Introducing Tools: Bridging the Knowledge Gap

After running our basic chat interface, we quickly discovered its limitations. While the LLM could handle general questions about e-commerce and provide helpful responses, it hit a wall when faced with specific, data-dependent queries. For instance, when a customer asked, "Do you have this part in your inventory?" the assistant could only respond with "I don't have access to your inventory database" or similar unhelpful responses.

This is where tools come into play. Tools enable us to extend the LLM's capabilities by providing it with access to external functions, APIs, and data sources. Instead of being limited to its training data, the model can now call specific functions to retrieve real-time information.

Let's start with a simple example by creating a basic inventory checking tool:

    # A simplistic inventory database
    inventory = {
        "Nikon AF-P DX Nikkor 10-20mm f/4.5-5.6G VR": 10,
        "Tokina AT-X 11-20mm f/2.8 PRO DX": 3,
        "Sigma 10-20mm f/3.5 EX DC HSM": 50,
    }

    # Let us add a basic tool to check our inventory
    def check_inventory(product_name):
        if product_name in inventory:
            return f"We have {inventory[product_name]} units of {product_name} in stock."
        else:
            return f"We do not have {product_name} in stock."

    # There's a particular dictionary structure that's required to describe this tool function:
    check_inventory_function = {
        "name": "check_inventory",
        "description": "Checks our inventory and returns the number of items in our stock. Call this whenever you need to know if the item is available in our stock, for example when a customer asks 'Do you have this product in stock?' or 'How many of this product do you have?' or 'Is this product available?'",
        "parameters": {
            "type": "object",
            "properties": {
                "product_name": {
                    "type": "string",
                    "description": "The name of the product that the customer wants to check the inventory for",
                },
            },
            "required": ["product_name"],
            "additionalProperties": False
        }
    }

    # The following defines the list of tools that can be used by the assistant.
    # Currently, it includes only the check_inventory function, but more tools can be added here as needed.
    tools = [{"type": "function", "function": check_inventory_function}]

Understanding Tool Definitions

The key to making tools work with LLMs is the structure of function definitions. This JSON schema tells the model:

- What the function does (description) - This is crucial, as it helps the LLM understand when to call the tool.

- What parameters it expects (parameters) - Including their types and descriptions.

- Which parameters are required (required) - Ensuring the LLM provides all necessary inputs.

Notice how the description is written in natural language with specific examples of when to use the tool. This helps the LLM understand the context and trigger conditions for calling the function. The more descriptive and specific you are, the better the LLM will be at deciding when to use your tools. This simple inventory tool transforms our chatbot from a generic assistant into a specialized eCommerce helper that can provide real, actionable information about product availability.
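Since the schema describes what the model is supposed to send, it can also be used on the application side to sanity-check what actually arrives. The sketch below is a hand-rolled, deliberately minimal check covering only `required`, `additionalProperties`, and string types; the `validate_arguments` helper is an assumption for illustration, not part of any SDK, and a real project might prefer a full JSON Schema validator.

```python
import json
from typing import Any, Dict, List

# The "parameters" portion of the tool definition shown above.
parameters_schema: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "product_name": {"type": "string"},
    },
    "required": ["product_name"],
    "additionalProperties": False,
}


def validate_arguments(raw_arguments: str, schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the arguments look usable."""
    try:
        arguments = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        return [f"arguments are not valid JSON: {exc.msg}"]
    if not isinstance(arguments, dict):
        return ["arguments must be a JSON object"]

    problems: List[str] = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required parameter: {name}")
    if schema.get("additionalProperties") is False:
        for name in arguments:
            if name not in properties:
                problems.append(f"unexpected parameter: {name}")
    for name, spec in properties.items():
        if name in arguments and spec.get("type") == "string":
            if not isinstance(arguments[name], str):
                problems.append(f"parameter {name} must be a string")
    return problems


print(validate_arguments('{"product_name": "Sigma 10-20mm f/3.5 EX DC HSM"}', parameters_schema))
print(validate_arguments('{"color": "red"}', parameters_schema))
```

A check like this runs before the tool itself, so a malformed request can be reported back to the model instead of raising an exception inside your business logic.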

Making LLMs Tool Aware: The Callback Connection

Now comes the crucial step: making our LLM aware of the tools we've defined. This is where the magic happens, and where we can see the striking parallel to traditional callback programming.

    def chat(message, history):
        messages = [{"role": "system", "content": system_prompt}] + history + [{"role": "user", "content": message}]
        response = openai.chat.completions.create(model=MODEL, messages=messages, tools=tools)

        # The model asked for a tool instead of answering directly
        if response.choices[0].finish_reason == "tool_calls":
            message = response.choices[0].message
            # Only the first requested tool call is handled in this example
            tool_name = message.tool_calls[0].function.name

            if tool_name == "check_inventory":
                response = use_check_inventory_tool(message)

            # Append the model's request and our tool result, then ask the model again
            messages.extend([message, response])
            response = openai.chat.completions.create(model=MODEL, messages=messages)

        return response.choices[0].message.content

The Tools Parameter: Registering Our Callbacks

The first step is to inform the LLM about the tools at its disposal. Notice how we pass the tools parameter to the openai.chat.completions.create method:

    def chat(message, history):
        messages = [{"role": "system", "content": system_prompt}] + history + [{"role": "user", "content": message}]
        response = openai.chat.completions.create(model=MODEL, messages=messages, tools=tools)

This is essentially registering our callback functions with the LLM, much like how you would register event handlers in a GUI framework. The LLM now knows about our check_inventory tool and understands when to use it based on the description we provided during tool definition.

The LLM uses its internal reasoning to evaluate the user's input against the tool descriptions. When it encounters a question like "Do you have Nikon lenses in stock?" it recognizes that this matches the semantic conditions we defined in our tool's description and decides that the check_inventory tool should be invoked.

The Tool Call Trigger: finish_reason as "tool_calls"

When the LLM determines that it needs to use a tool, it doesn't just guess or make up an answer. Instead, the API response comes back with finish_reason set to "tool_calls" - this is our callback trigger signal:

    if response.choices[0].finish_reason == "tool_calls":
        message = response.choices[0].message
        tool_name = message.tool_calls[0].function.name

This is the moment where the LLM is essentially saying: "I don't have this information in my training data, but I know there's a tool that can help. Here's what I need you to do, and here are the parameters you'll need."

The LLM also provides all the contextual information required to invoke the tool - in our case, it extracts the product name from the user's natural language query and passes it as a structured parameter.

The Tool Handler: Our Callback Implementation

The use_check_inventory_tool function is our callback handler - the code we write to respond to the LLM's tool call request:

    import json

    def use_check_inventory_tool(message):
        tool_call = message.tool_calls[0]
        # The arguments arrive as a JSON string, so parse them first
        arguments = json.loads(tool_call.function.arguments)
        product_name = arguments.get('product_name')
        # Named stock_message to avoid shadowing the global inventory dictionary
        stock_message = check_inventory(product_name)
        response = {
            "role": "tool",
            "content": json.dumps({"product_name": product_name, "inventory": stock_message}),
            "tool_call_id": tool_call.id
        }
        return response

This function:

- Extracts the parameters that the LLM provided

- Invokes our actual business logic (the check_inventory function)

- Formats the response in a way the LLM can understand

- Returns the result back to the conversation flow
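The handler above deals with one tool and the first tool call only. As the tool list grows, one possible generalization is a registry that maps tool names to Python functions and answers every call on the message. In this sketch, `TOOL_REGISTRY`, `handle_tool_calls`, and the `SimpleNamespace` objects that imitate the SDK's message are all assumptions for illustration; `apply_discount` is a made-up tool name used to show the unknown-tool path.

```python
import json
from types import SimpleNamespace
from typing import Callable, Dict, List

inventory = {"Sigma 10-20mm f/3.5 EX DC HSM": 50}


def check_inventory(product_name: str) -> str:
    if product_name in inventory:
        return f"We have {inventory[product_name]} units of {product_name} in stock."
    return f"We do not have {product_name} in stock."


# Hypothetical registry: tool name -> the Python function that implements it.
TOOL_REGISTRY: Dict[str, Callable[..., str]] = {"check_inventory": check_inventory}


def handle_tool_calls(message) -> List[dict]:
    """Build one role="tool" reply per tool call found on the assistant message."""
    replies = []
    for tool_call in message.tool_calls:
        handler = TOOL_REGISTRY.get(tool_call.function.name)
        if handler is None:
            # Report unknown tools back to the model instead of crashing.
            content = json.dumps({"error": f"unknown tool: {tool_call.function.name}"})
        else:
            arguments = json.loads(tool_call.function.arguments)
            content = json.dumps({"result": handler(**arguments)})
        replies.append({"role": "tool", "content": content, "tool_call_id": tool_call.id})
    return replies


def fake_tool_call(call_id: str, name: str, arguments: dict) -> SimpleNamespace:
    # Imitates the attribute layout used above: .id, .function.name, .function.arguments
    return SimpleNamespace(
        id=call_id,
        function=SimpleNamespace(name=name, arguments=json.dumps(arguments)),
    )


fake_message = SimpleNamespace(tool_calls=[
    fake_tool_call("call_1", "check_inventory", {"product_name": "Sigma 10-20mm f/3.5 EX DC HSM"}),
    fake_tool_call("call_2", "apply_discount", {"code": "SAVE10"}),
])
replies = handle_tool_calls(fake_message)
print(replies)
```

Adding a new tool then means adding one entry to the registry and one definition to the tools list, with no changes to the dispatch code.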

The Callback Loop Completes

The final step completes our callback cycle. We add both the LLM's tool call request and our tool's response back to the message history, then let the LLM generate a natural language response to the user:

    messages.extend([message, response])
    response = openai.chat.completions.create(model=MODEL, messages=messages)
    return response.choices[0].message.content

The Callback Parallel: From GUI Events to Language Events

This entire flow mirrors the callback pattern commonly used in traditional GUI programming. In the old days, you would:

- Register a callback with the GUI framework (like onClick handlers)

- Wait for the event (user clicks a button)

- Receive the trigger (framework calls your callback)

- Process the request (your callback function executes)

- Update the interface (display results to the user)

With LLM tools, the pattern is identical:

- Register a tool with the LLM (via the tools parameter)

- Wait for the semantic event (user asks a question that matches our tool's purpose)

- Receive the trigger (finish_reason == "tool_calls")

- Process the request (our tool handler function executes)

- Update the conversation (LLM incorporates results into its response)

The only difference is that instead of mouse clicks and button presses, our "events" are natural language questions and intentions. The LLM acts as an intelligent event dispatcher, understanding context and semantics to determine when our callbacks should be triggered. This is the fundamental insight: LLM tools are callbacks for the age of natural language interfaces. We're not inventing a new programming paradigm - we're adapting one of the most successful patterns in software development to work with intelligent language models.
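The five steps can be exercised end to end without any network access by replacing the LLM with a stub. Everything in this sketch is an assumption made for illustration: `stub_model` is a scripted stand-in that asks for the tool once and then answers, and the dictionaries it returns are a simplified shape, not the real API response. The sketch also loops rather than making a single follow-up request, on the assumption that a model may ask for a tool more than once.

```python
import json
from typing import Callable, Dict, List


def check_inventory(product_name: str) -> str:
    stock = {"Tokina AT-X 11-20mm f/2.8 PRO DX": 3}
    if product_name in stock:
        return f"We have {stock[product_name]} units of {product_name} in stock."
    return f"We do not have {product_name} in stock."


def stub_model(messages: List[dict]) -> dict:
    """Hypothetical stand-in for the LLM: requests the tool once, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        result = json.loads(last["content"])["result"]
        return {"finish_reason": "stop", "content": f"Good news: {result}"}
    return {
        "finish_reason": "tool_calls",
        "tool_call": {
            "id": "call_1",
            "name": "check_inventory",
            "arguments": json.dumps({"product_name": "Tokina AT-X 11-20mm f/2.8 PRO DX"}),
        },
    }


def run_turn(user_message: str, model: Callable[[List[dict]], dict],
             tools: Dict[str, Callable[..., str]], max_rounds: int = 5) -> str:
    messages = [{"role": "user", "content": user_message}]   # wait for the semantic event
    for _ in range(max_rounds):
        reply = model(messages)
        if reply["finish_reason"] != "tool_calls":            # no trigger: plain answer
            return reply["content"]
        call = reply["tool_call"]                             # receive the trigger
        result = tools[call["name"]](**json.loads(call["arguments"]))  # run our callback
        messages.append({"role": "assistant", "tool_call": call})
        messages.append({"role": "tool", "tool_call_id": call["id"],
                         "content": json.dumps({"result": result})})   # update the conversation
    raise RuntimeError("model kept requesting tools")


answer = run_turn("Do you have the Tokina lens in stock?", stub_model,
                  {"check_inventory": check_inventory})
print(answer)
```

The `max_rounds` cap is a design choice of this sketch: it keeps a misbehaving model from requesting tools forever.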

The Power of Tools: From "I Don't Know" to "Yes, We Have 3 Units!"

The dramatic difference becomes apparent when we add tool support to our agent. Let's examine the same customer interaction:

Customer Question: "Do you have the Tokina lens in stock?"

Before Tools (Tool-Free Agent)

Agent Response: "I don't have access to real-time inventory data, so I can't check the stock of specific products. However, you can find NIKKOR VR lenses at various retailers..."

After Tools (Tool-Enabled Agent)

Agent Response: "Yes, we have 3 units of the Tokina AT-X 11-20mm f/2.8 PRO DX lens in stock! If you're interested, I can assist you with the purchase or any questions you might have."

What Changed?

The tool-enabled agent demonstrates several key capabilities:

- Intelligent Tool Selection: The agent recognized that this was a stock-related question and decided to use the inventory checking tool

- Smart Parameter Extraction: It correctly identified "Tokina lens" and mapped it to the specific product "Tokina AT-X 11-20mm f/2.8 PRO DX" from our inventory

- Real-Time Data Access: It retrieved actual stock information (3 units) from our database

- Contextual Response: It provided a helpful, actionable answer and even offered additional assistance

The Transformation

This single example showcases the fundamental shift from a static knowledge system to a dynamic, data-driven assistant. The agent went from:

- ❌ "I don't have access to that information."

- ✅ "Yes, we have 3 units in stock!"

The callback pattern is working perfectly: the LLM recognized the need for external data, triggered our inventory tool, and seamlessly integrated the results into a natural conversation. This is exactly how callbacks have always worked—the framework (LLM) detects the right moment and calls your function with the appropriate parameters.

Part 1 Conclusion: The Foundation is Laid

We've just witnessed something remarkable: the transformation of a static chatbot into a dynamic, data-driven assistant through the power of LLM tools. In just a few lines of code, we've implemented a callback pattern that would make any X/Motif programmer feel right at home.

What We've Accomplished

- Established the callback parallel: LLM tools are simply callbacks for the age of natural language interfaces

- Built a working inventory system: From a basic dictionary to functional tool integration

- Witnessed the magic: Our agent went from "I don't have access to that data" to "Yes, we have 3 units in stock!"

- Proven the pattern scales: The same callback mechanism works whether you're building simple functions or complex systems

The Key Insight

The most important realization isn't technical—it's conceptual. LLM tools aren't some mysterious AI construct; they're callbacks that respond to language events instead of mouse clicks. Once you see this, everything else falls into place.

But Here's the Thing...

Our current inventory system is intentionally simple—just a Python dictionary with a handful of products. While it perfectly demonstrates the callback mechanism, it has some obvious limitations:

- Users must know the exact product name

- No semantic understanding of queries like "wide angle lens for Nikon"

- No ability to handle typos or variations

- Limited scalability for real-world applications
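To make the first and third limitations tangible, the sketch below shows one inexpensive stopgap: token-level fuzzy matching with `difflib` from the Python standard library. This is not the vector-database approach that Part 2 builds; the `find_product` helper and its 0.8 cutoff are assumptions chosen for this example, and it still has no semantic understanding of a query.

```python
from difflib import get_close_matches
from typing import Optional

inventory = {
    "Nikon AF-P DX Nikkor 10-20mm f/4.5-5.6G VR": 10,
    "Tokina AT-X 11-20mm f/2.8 PRO DX": 3,
    "Sigma 10-20mm f/3.5 EX DC HSM": 50,
}


def find_product(query: str, cutoff: float = 0.8) -> Optional[str]:
    """Return the product whose name shares the most (fuzzily matched) words with the query."""
    best_name, best_score = None, 0
    query_tokens = query.lower().split()
    for name in inventory:
        name_tokens = name.lower().split()
        # Count query words that closely match some word in the product name.
        score = sum(
            1 for token in query_tokens
            if get_close_matches(token, name_tokens, n=1, cutoff=cutoff)
        )
        if score > best_score:
            best_name, best_score = name, score
    return best_name


print(find_product("Tokina lens"))
print(find_product("tokona lens"))   # typo in the brand name
print(find_product("Canon flash"))   # nothing similar in the inventory
```

A lookup like this tolerates a partial name or a small typo, but a query such as "wide angle lens for Nikon" still depends on literal word overlap, which is exactly the gap that similarity search over embeddings is meant to close.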

Coming in Part 2: The Evolution

What if I told you that the same callback pattern scales to sophisticated machine learning systems? In Part 2, we'll transform our basic dictionary into an intelligent, vector database-powered inventory system that can:

- Understand natural language queries like "affordable camera lens" or "weather-sealed wide angle."

- Find products even with imprecise descriptions.

- Scale to thousands of products.

- Learn from user interactions.

The beautiful part? The callback mechanism remains exactly the same. We're not changing the fundamental pattern; we're just making our tool more sophisticated. You still register the tool with the LLM, and the LLM still decides when to call it and still works the results into its reply. We're simply replacing a simple dictionary lookup with a vector similarity search.

This is the power of the callback pattern: it scales from basic functions to AI-powered systems without changing the core architecture.

Why This Matters

Understanding LLM tools as callbacks isn't just an academic exercise—it's a practical framework for building real-world AI applications. Whether you're building a simple calculator or a complex recommendation engine, the pattern remains the same. The complexity lives in your tool, not in the integration. Ready to see how far we can push this pattern? In Part 2, we'll build something that would have been impossible with traditional callbacks: an AI-powered inventory system that understands not just what customers ask for, but what they actually mean.

The callback renaissance is just getting started!

Try It Yourself!

Curious to see this code in action? You can find the complete code used in this blog post on my GitHub repository: LLMToolsAndCallbacks.

The repository includes:

- Complete Jupyter notebook with all the examples from this post

- Working code for both basic and enhanced inventory systems

- Ready-to-run demos that you can execute locally

- Step-by-step setup instructions to get you started quickly

Getting Started

1. Clone the repository:

    git clone https://github.com/qubibrain/LLMToolsAndCallbacks.git
    cd LLMToolsAndCallbacks

2. Set up the environment:

    # Install dependencies
    uv sync

    # Copy environment template
    cp env.example .env
    # Edit .env with your OpenAI API key

3. Launch the notebook:

    uv run jupyter notebook

We'd Love Your Feedback!

This callback analogy has been a game-changer for how I think about LLM tools, and I'm curious about your experience:

- Does the callback parallel resonate with you? Have you found similar patterns in your own work?

- What other programming concepts do you see reflected in modern AI development?

- What would you like to see in Part 2 of this series?

Drop a comment below or reach out on GitHub with your thoughts, questions, or suggestions. I'd especially love to hear from developers who've worked with both traditional callbacks and modern LLM tools—your insights could help shape the next part of this exploration. The callback renaissance is just beginning, and your perspective makes it richer.

Post Details

Author

Anupam Chandra (AC)

Tech strategist, AI explorer, and digital transformation architect. Driven by curiosity, powered by learning, and lit up by the beauty of simple things done exceptionally well.

Published

September 5, 2025
